I’m slowly teaching myself Swift by making a few little toy iOS apps. One of them is this “depth camera” that I use as a webcam for live streams and for taking portraits of friends at conferences.

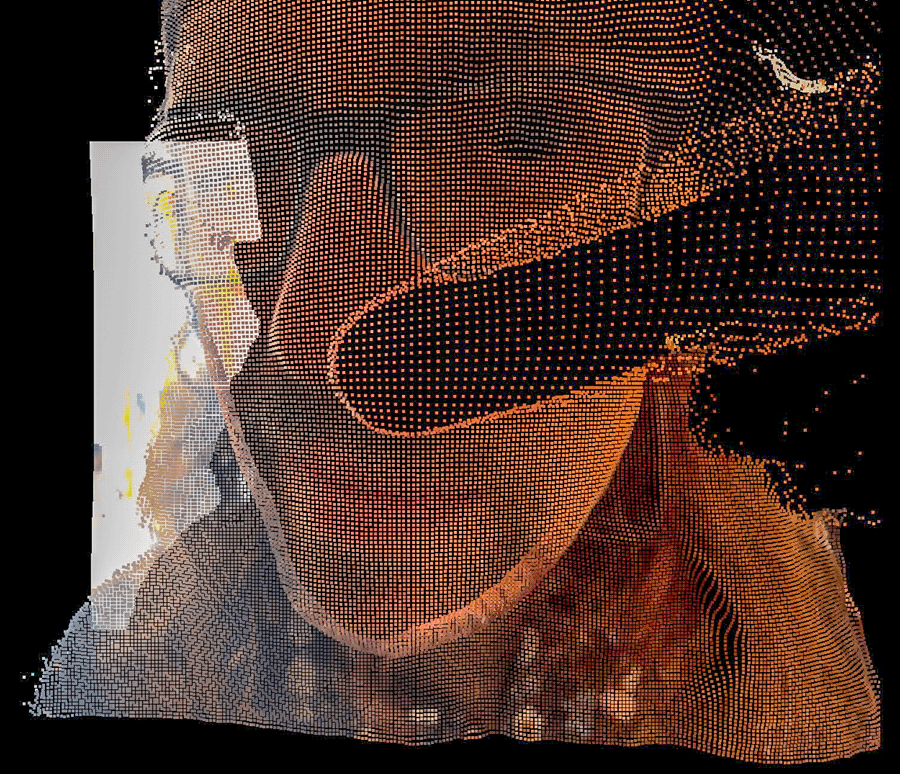

It works by combining the depth data from my iPhone’s camera with the camera’s video feed. Every frame, it generates one dot per pixel of the video feed and places each dot in 3D space, using the depth data to control the z value.
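Here's a minimal sketch of that per-pixel mapping in plain Swift. It is not the app's actual code: the `Dot` type, the `makeDots` function, and the `depthScale` parameter are all made up for illustration, and the nearest-neighbor depth lookup is just one assumed way to deal with a depth map that's smaller than the video frame.

```swift
// Hypothetical type for illustration; not taken from the app.
struct Dot {
    var x: Float       // horizontal position, roughly -0.5 ... 0.5
    var y: Float       // vertical position, roughly -0.5 ... 0.5 (up is positive)
    var z: Float       // pushed away from the viewer by the depth value
    var color: UInt32  // packed color copied from the video pixel
}

/// Builds one dot per video pixel.
/// Depth is sampled with a nearest-neighbor lookup (an assumption),
/// since the depth map may be lower resolution than the video frame.
func makeDots(
    pixels: [UInt32], width: Int, height: Int,
    depth: [Float], depthWidth: Int, depthHeight: Int,
    depthScale: Float = 1.0
) -> [Dot] {
    precondition(pixels.count == width * height, "pixel buffer size mismatch")
    precondition(depth.count == depthWidth * depthHeight, "depth buffer size mismatch")

    var dots: [Dot] = []
    dots.reserveCapacity(pixels.count)

    for row in 0..<height {
        for col in 0..<width {
            // Map the video pixel to the nearest depth sample.
            let depthCol = col * depthWidth / width
            let depthRow = row * depthHeight / height
            let d = depth[depthRow * depthWidth + depthCol]

            dots.append(Dot(
                x: Float(col) / Float(width) - 0.5,
                y: 0.5 - Float(row) / Float(height),
                z: -d * depthScale,
                color: pixels[row * width + col]
            ))
        }
    }
    return dots
}
```

In a real app the resulting array would be handed to whatever draws the points each frame. Repeating each depth sample across several video pixels like this is also one plausible source of blocky artifacts when the depth map is low-res.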

The depth data is really inconsistent and low-res, with weird sampling / interpolation artifacts. So it produces beautiful results!

Here are some images from the LA Ink & Switch Unconf.

I needed a depth-punny name, so I just went with an MBV reference (warning: flashing).